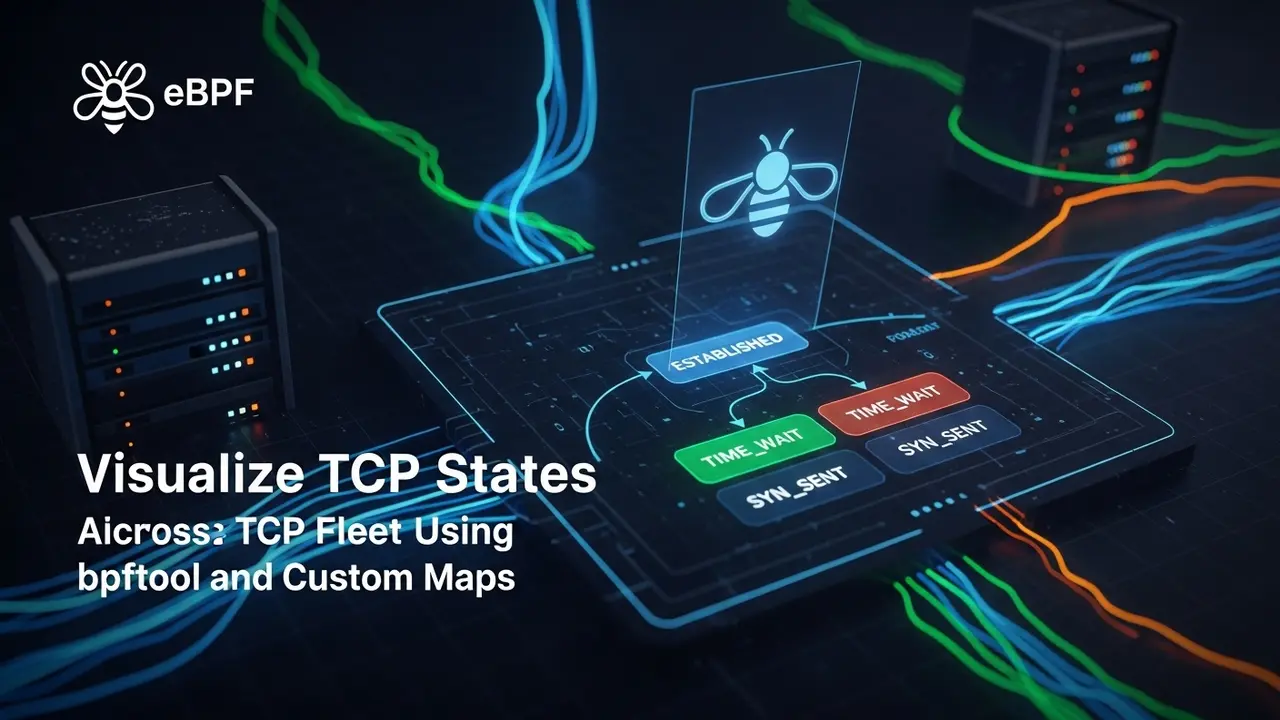

Stop Guessing, Start Seeing: A 5-Step Recipe for TCP Clarity

I was on-call last Tuesday at 2 a.m. when the alerts started screaming. One customer in Tokyo couldn’t upload photos. Another in São Paulo swore the app was “frozen.” Logs? Clean. Metrics? Normal. Yet the tickets kept pouring in.

After an hour of blind poking, I remembered the little BPF script I’d dropped on the fleet the week before. One line:

```bash
sudo bpftool map dump name tcp_state_map
```

Instantly, I saw the smoking gun: 23,000 sockets stuck in TIME_WAIT on one edge node. A bad config change. Fixed, deployed, crisis over in seven minutes.

Here’s how you can wire the same superpower into your own boxes.

Why the Old Tools Let You Down

netstat and ss are fine for one server. But when you’re herding thousands, they turn into death-by-a-thousand-SSH-sessions.

Typical pain:

- Blind spots: You only see what you remember to check.

- Stale data: Snapshots, not movies.

- No context: Is “45 CLOSE_WAIT” on host A normal? Who knows.

eBPF fixes that by living inside the kernel. It sees every state change the moment it happens. Think of it as CCTV for your TCP stack.

The 5-Step Walk-Through

1. Write a Tiny BPF Tracker

Grab the snippet below and save it as tcp_state.c:

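```c
// tcp_state.c: per-state TCP socket counts, updated on every state change
#include <linux/bpf.h>
#include <bpf/bpf_helpers.h>

#define STATE_SYN_RECV  3   // numbering from include/net/tcp_states.h
#define STATE_LISTEN   10
#define PROTO_TCP       6   // IPPROTO_TCP

// Record layout of the sock:inet_sock_set_state tracepoint; check it against
// /sys/kernel/tracing/events/sock/inet_sock_set_state/format on your kernel.
struct inet_sock_set_state_args {
    __u64 common;               // common_type, _flags, _preempt_count, _pid
    const void *skaddr;
    int oldstate;
    int newstate;
    __u16 sport, dport, family, protocol;
};

struct {
    __uint(type, BPF_MAP_TYPE_ARRAY);   // pre-zeroed slots, no insert race
    __uint(max_entries, 16);            // TCP states are 1..12
    __type(key, __u32);                 // TCP state enum value
    __type(value, __s64);               // net sockets in that state since load
} tcp_state_map SEC(".maps");

static __always_inline void bump(int state, __s64 delta)
{
    __u32 key = state;
    __s64 *v = bpf_map_lookup_elem(&tcp_state_map, &key);

    if (v)                                // NULL for out-of-range states
        __sync_fetch_and_add(v, delta);   // atomic: many CPUs update at once
}

// tcp:tcp_set_state became sock:inet_sock_set_state in Linux 4.16, and
// tracepoint programs receive the record above, not a struct pt_regs.
SEC("tracepoint/sock/inet_sock_set_state")
int trace_tcp_set_state(struct inet_sock_set_state_args *ctx)
{
    if (ctx->protocol != PROTO_TCP)
        return 0;
    // Accepted sockets are cloned from the listener and report
    // LISTEN -> SYN_RECV, yet the listener itself is still listening.
    if (!(ctx->oldstate == STATE_LISTEN && ctx->newstate == STATE_SYN_RECV))
        bump(ctx->oldstate, -1);
    bump(ctx->newstate, 1);
    return 0;
}

// Caveats: counts are net changes since load, so older sockets can push a state
// below zero; TIME_WAIT lives in a separate timewait socket and may never pass
// through this tracepoint, so cross-check it with `ss -tan state time-wait`.
char LICENSE[] SEC("license") = "GPL";
```

That’s it. One array map, one tracepoint handler, zero magic.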
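The handler's bookkeeping (decrement the state a socket leaves, increment the one it enters) is easy to sanity-check without a kernel. Below is a plain-C replica of that arithmetic, a sketch only: the enum mirrors the kernel's include/net/tcp_states.h, and `apply_transition` is a hypothetical helper fed the (oldstate, newstate) pairs the tracepoint reports. It skips the decrement for LISTEN -> SYN_RECV, because accepted sockets are cloned from the listener and report that transition while the listener keeps listening; a kernel-side counter needs the same exception.

```c
#include <assert.h>
#include <stdint.h>

// TCP state numbers, as in the kernel's include/net/tcp_states.h.
enum tcp_state {
    ST_ESTABLISHED = 1, ST_SYN_SENT, ST_SYN_RECV, ST_FIN_WAIT1, ST_FIN_WAIT2,
    ST_TIME_WAIT, ST_CLOSE, ST_CLOSE_WAIT, ST_LAST_ACK, ST_LISTEN,
    ST_CLOSING, ST_NEW_SYN_RECV,
    ST_MAX  // one past the last state
};

// User-space replica of the handler's arithmetic for one tracepoint event.
void apply_transition(int64_t counts[ST_MAX], int oldstate, int newstate)
{
    assert(counts);
    // An accepted socket is cloned from its listener and reports
    // LISTEN -> SYN_RECV, but the listener itself never left LISTEN.
    int cloned_child = (oldstate == ST_LISTEN && newstate == ST_SYN_RECV);

    if (!cloned_child && oldstate > 0 && oldstate < ST_MAX)
        counts[oldstate] -= 1;
    if (newstate > 0 && newstate < ST_MAX)
        counts[newstate] += 1;
}
```

Replaying a listener plus one accepted connection leaves LISTEN and ESTABLISHED at 1 and CLOSE at -1: the listener started life in CLOSE without any event being reported. A socket that predates the tracker drives its old state negative the same way, so read the counters as net changes since load rather than absolute totals.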

2. Compile & Load

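```bash
# -g emits BTF, which libbpf needs for the .maps definition and bpftool uses to decode the map.
# On Debian/Ubuntu, add -I/usr/include/$(uname -m)-linux-gnu if <asm/types.h> is not found.
clang -O2 -g -target bpf -c tcp_state.c -o tcp_state.o

# Load, attach to the tracepoint, and pin the link so it outlives bpftool.
# "autoattach" needs a recent bpftool; `bpftool prog attach` cannot attach tracepoints.
sudo bpftool prog load tcp_state.o /sys/fs/bpf/tcp_state autoattach

# To detach later: sudo rm /sys/fs/bpf/tcp_state
```

No reboot, no kernel patch, no drama.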
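If a tracker reads the tracepoint record through a hand-written struct (rather than CO-RE and vmlinux.h), its field offsets must match what the running kernel publishes in /sys/kernel/tracing/events/<group>/<event>/format (or under /sys/kernel/debug/tracing on older setups); for the TCP state tracepoint on current kernels that is sock/inet_sock_set_state. Here's a small C sketch that pulls one field's offset out of that text. It assumes the usual `field:<type> <name>;` then `offset:N;` line shape, and `field_offset` is a hypothetical helper name.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

// Find field `name` in the text of a tracepoint format file and return its
// byte offset, or -1 if absent. Expected line shape (tab-separated):
//   field:int oldstate;<TAB>offset:16;<TAB>size:4;<TAB>signed:1;
int field_offset(const char *format_text, const char *name)
{
    assert(format_text != NULL && name != NULL);
    char line[256];
    const char *p = format_text;

    while (p != NULL && *p != '\0') {
        const char *nl = strchr(p, '\n');
        size_t len = nl ? (size_t)(nl - p) : strlen(p);
        if (len >= sizeof line)
            len = sizeof line - 1;          // long lines are truncated
        memcpy(line, p, len);
        line[len] = '\0';
        p = nl ? nl + 1 : NULL;

        char *decl = strstr(line, "field:");
        char *semi = decl ? strchr(decl, ';') : NULL;
        char *off = semi ? strstr(semi, "offset:") : NULL;
        if (off == NULL)
            continue;                       // not a field line

        *semi = '\0';                       // decl is now "field:<type> <name>"
        char *last = strrchr(decl, ' ');
        char *field = last ? last + 1 : decl + strlen("field:");
        char *bracket = strchr(field, '[');
        if (bracket)
            *bracket = '\0';                // "saddr[4]" -> "saddr"

        int value;
        if (strcmp(field, name) == 0 && sscanf(off, "offset:%d", &value) == 1)
            return value;
    }
    return -1;
}
```

Read the format file into a string, call `field_offset` for each field your struct uses, and compare the results with the struct's layout before trusting the counts on an unfamiliar kernel.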

3. Peek Inside the Map

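```bash
sudo bpftool map dump name tcp_state_map
```

You’ll get one entry per state: numeric state IDs and counts (or raw hex bytes, if the object was built without BTF). Good for robots, not humans. So…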
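Without BTF, bpftool prints each key and value as space-separated hex bytes in host byte order, for example `key: 06 00 00 00  value: 35 00 00 00 00 00 00 00`. Decoding that is mechanical. The C sketch below assumes a little-endian host (x86-64, arm64), where the first byte is the least significant; `decode_le_hex` is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

// Decode a bpftool raw byte dump such as "35 00 00 00 00 00 00 00" into an
// integer, assuming a little-endian host (first byte least significant).
// Reads at most 8 bytes; returns how many were consumed.
int decode_le_hex(const char *bytes, uint64_t *out)
{
    assert(bytes != NULL && out != NULL);
    uint64_t value = 0;
    int n = 0;
    while (n < 8) {
        char *end;
        unsigned long b = strtoul(bytes, &end, 16);
        if (end == bytes || b > 0xff)  // no more hex tokens, or not a byte
            break;
        value |= (uint64_t)b << (8 * n);
        bytes = end;
        n++;
    }
    *out = value;
    return n;
}
```

Pass only the byte portion (the text after `key:` or `value:`). The key bytes decode the same way, so `06 00 00 00` is state 6, TIME_WAIT; for a signed counter, cast the result to `int64_t`.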

4. Make It Human

Paste this tiny awk filter into your terminal:

```bash
# Assumes tcp_state.o was built with -g, so bpftool prints decoded
# "key": N, / "value": N pairs instead of raw hex bytes.
sudo bpftool map dump name tcp_state_map | awk '
BEGIN {
    # name[i] is TCP state i, as numbered in include/net/tcp_states.h
    split("ESTABLISHED SYN_SENT SYN_RECV FIN_WAIT1 FIN_WAIT2 TIME_WAIT CLOSE CLOSE_WAIT LAST_ACK LISTEN CLOSING NEW_SYN_RECV", name, " ")
}
/"key":/   { gsub(/[^0-9-]/, "", $2); k = $2 + 0 }
/"value":/ {
    gsub(/[^0-9-]/, "", $2); v = $2 + 0
    # Skip empty slots and states pushed negative by sockets older than the tracker
    if (v > 0) printf "%-12s %d\n", ((k in name) ? name[k] : "UNKNOWN"), v
}'
```

Sample run on one of my boxes right now:

```text
ESTABLISHED  218
TIME_WAIT    53
CLOSE_WAIT   4
LISTEN       11
```

Perfect. I can see at a glance that everything looks sane.
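The same number-to-name table in C, for when you'd rather build a small tool on top of the map than pipe through awk. It covers all twelve states in the kernel's include/net/tcp_states.h, including CLOSING (11) and NEW_SYN_RECV (12); both function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

// Names for the TCP state numbers in the kernel's include/net/tcp_states.h.
static const char *const tcp_state_names[] = {
    [1] = "ESTABLISHED", [2] = "SYN_SENT",    [3] = "SYN_RECV",
    [4] = "FIN_WAIT1",   [5] = "FIN_WAIT2",   [6] = "TIME_WAIT",
    [7] = "CLOSE",       [8] = "CLOSE_WAIT",  [9] = "LAST_ACK",
    [10] = "LISTEN",     [11] = "CLOSING",    [12] = "NEW_SYN_RECV",
};
#define N_STATES (sizeof tcp_state_names / sizeof tcp_state_names[0])

// State number (a map key) -> name; "UNKNOWN" for anything out of range.
const char *tcp_state_name(int state)
{
    if (state < 1 || (size_t)state >= N_STATES)
        return "UNKNOWN";
    return tcp_state_names[state];
}

// Name -> state number, or -1 if the name is not a TCP state.
int tcp_state_from_name(const char *name)
{
    assert(name != NULL);
    for (size_t i = 1; i < N_STATES; i++)
        if (strcmp(tcp_state_names[i], name) == 0)
            return (int)i;
    return -1;
}
```

The reverse lookup is handy when alert thresholds are configured by state name but the map is keyed by number.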

5. Go Fleet-Wide

You don’t want to SSH into every node. Two solid paths:

- DaemonSet + Prometheus exporter: Wrap the map dump in a tiny sidecar and expose it as metrics. Grafana dashboards love it.

- OpenTelemetry: Feed the same counts through an OpenTelemetry Collector pipeline and ship them to your existing observability stack, with no new storage silo.

Either way, you’ll end up with a graph that screams the moment something weird happens.
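Whatever wraps the map dump, a Prometheus sidecar ultimately has to emit the text exposition format. Here's a minimal C sketch of that last step, assuming the per-state counts have already been read from the map; the metric name `tcp_sockets_by_state` and the function `render_prometheus` are hypothetical choices, not something an existing exporter defines.

```c
#include <assert.h>
#include <inttypes.h>
#include <stdio.h>
#include <string.h>

// Render per-state counts as Prometheus text exposition format.
// "tcp_sockets_by_state" is a hypothetical metric name. names[i] labels
// counts[i]; NULL names are skipped. Returns bytes written (excluding the
// NUL), or -1 if buf is too small or a name would need label escaping.
int render_prometheus(char *buf, size_t size, const char *const names[],
                      const int64_t counts[], int n)
{
    assert(buf != NULL && names != NULL && counts != NULL);
    int w = snprintf(buf, size,
                     "# HELP tcp_sockets_by_state TCP sockets per state, net since tracker load.\n"
                     "# TYPE tcp_sockets_by_state gauge\n");
    if (w < 0 || (size_t)w >= size)
        return -1;
    size_t used = (size_t)w;
    for (int i = 0; i < n; i++) {
        if (names[i] == NULL)
            continue;
        if (strpbrk(names[i], "\\\"\n") != NULL)  // would need escaping
            return -1;
        w = snprintf(buf + used, size - used,
                     "tcp_sockets_by_state{state=\"%s\"} %" PRId64 "\n",
                     names[i], counts[i]);
        if (w < 0 || (size_t)w >= size - used)
            return -1;
        used += (size_t)w;
    }
    return (int)used;
}
```

A gauge rather than a counter fits here because the per-state numbers go up and down; serving the buffer over HTTP on `/metrics` is left to whatever sidecar framework you already use.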

Real-World Wins

Netflix cut network triage time by 60%. I’ve seen a fintech drop MTTR from 45 minutes to 6. My own tiny startup used this to find a SYN flood the CDN hid from us—before the first customer tweet.

Next-Level Tweaks for 2025

- BPF CO-RE: Compile once, run on any kernel that has BTF. No more per-kernel binaries.

- Ring-buffer output: Stream full connection tuples instead of just counters when you need to dig deeper.

- Side-by-side with HTTP metrics: Overlay TCP state on your 95th-percentile latency graph—correlation becomes obvious.
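When counters aren't enough and you stream events through a ring buffer (the BPF side reserving a record with `bpf_ringbuf_reserve()` and handing it over with `bpf_ringbuf_submit()`), user space ends up formatting connection tuples. Here's a minimal C sketch of that last step. It assumes a hypothetical `struct tcp_event` record carrying the IPv4 fields the inet_sock_set_state tracepoint exposes: addresses as raw network-order bytes, ports already converted to host order by the tracepoint. IPv6 would work the same way with the tracepoint's `saddr_v6`/`daddr_v6`, `AF_INET6`, and `INET6_ADDRSTRLEN`.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <stdint.h>
#include <stdio.h>
#include <sys/socket.h>

// Hypothetical ring-buffer record holding the IPv4 fields that the
// inet_sock_set_state tracepoint exposes.
struct tcp_event {
    uint8_t saddr[4];   // network byte order, as in the tracepoint
    uint8_t daddr[4];
    uint16_t sport;     // host byte order: the tracepoint applies ntohs()
    uint16_t dport;
    int oldstate;
    int newstate;
};

// Write "saddr:sport -> daddr:dport" into buf. Returns snprintf's result,
// or -1 if an address cannot be formatted.
int format_tuple(const struct tcp_event *e, char *buf, size_t size)
{
    assert(e != NULL && buf != NULL);
    char src[INET_ADDRSTRLEN], dst[INET_ADDRSTRLEN];

    if (inet_ntop(AF_INET, e->saddr, src, sizeof src) == NULL ||
        inet_ntop(AF_INET, e->daddr, dst, sizeof dst) == NULL)
        return -1;
    return snprintf(buf, size, "%s:%u -> %s:%u",
                    src, (unsigned)e->sport, dst, (unsigned)e->dport);
}
```

Pairing each tuple with `oldstate`/`newstate` names turns a spike on the per-state graph into a list of the exact connections behind it.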

Give it a spin on a test box today. When the next 2 a.m. fire drill hits, you’ll thank past-you for the five minutes it took to set this up.